Rhys Davies

on 24 March 2020

Building a Raspberry Pi cluster with MicroK8s

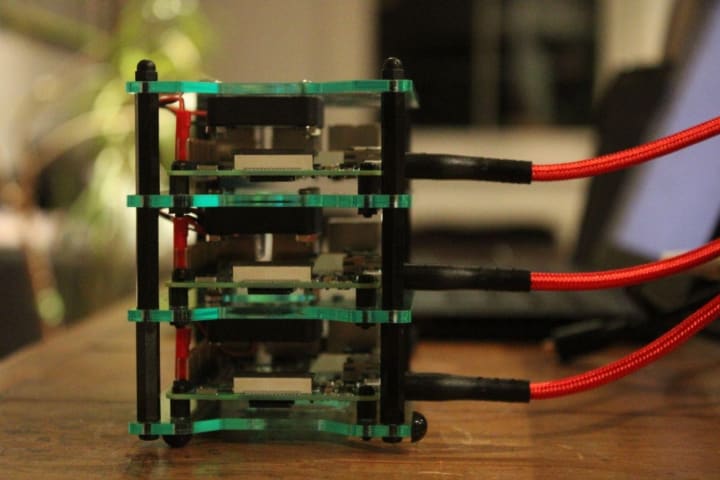

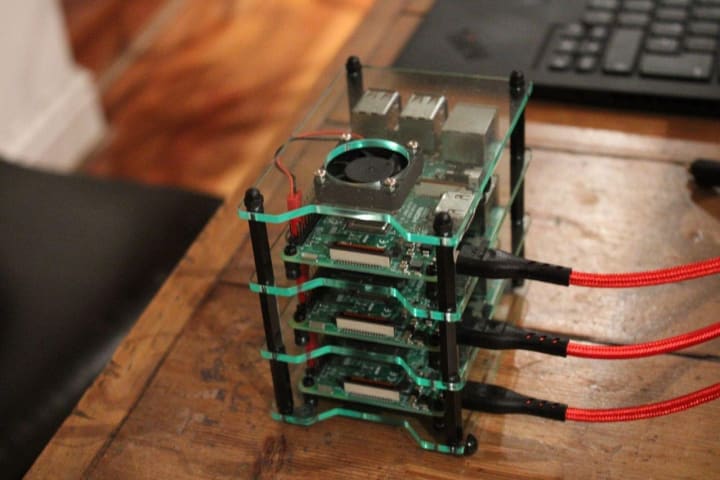

The tutorial for building a Raspberry Pi cluster with MicroK8s is here. This blog is not a tutorial. This blog aims to answer one question: why? Why would you build a Raspberry Pi cluster with MicroK8s? Here we go a little deeper to understand the hype around Kubernetes, the uses of cluster computing and the capabilities of MicroK8s.

Why build a Raspberry Pi MicroK8s cluster?

The simple answer is to offload computational work from your main computer to a cute little stack of Raspberry Pis. The longer answer is to give yourself, and your computer, a break to do other things and save time. You can use the cluster for resource allocation or as a separate system. For example, if you are a photographer who takes a lot of high-resolution photos, you might find that uploading, stitching, or rendering those photos is tedious. Instead, you could offload each photo to a Raspberry Pi. This way you have multiple things working at the same time and can get on with writing a blog about your trip.

Similarly, if you’re getting into the YouTube business you might find it takes a while to upload your videos. You have to keep a careful eye on the upload so that when it’s done you can go straight back to watching cat videos. With a Raspberry Pi MicroK8s cluster, you can offload the upload. It might take a little longer if your cluster isn’t that big, but it frees up some time. If anyone reading this wants to write a tutorial for one or both of these examples, get in touch.

Why use a Raspberry Pi?

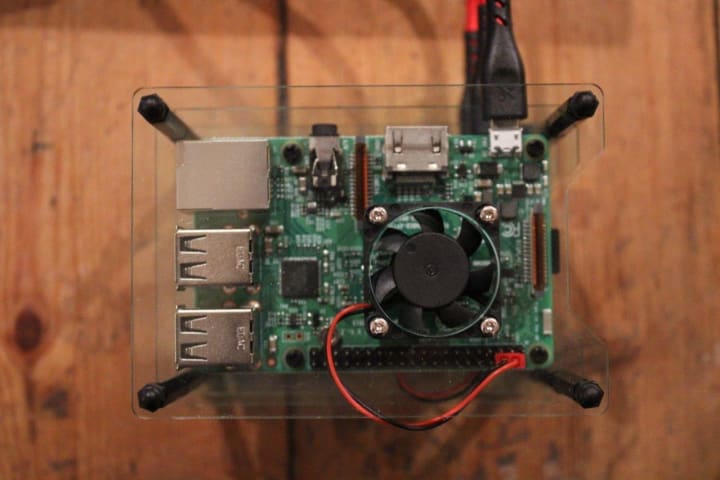

The Raspberry Pi is a series of small, single-board computers that took the world by storm. They are built and developed by a UK-based charity that aims to educate and lower the bar for people getting into technology.

“Our mission is to put the power of computing and digital making into the hands of people all over the world.” – The Raspberry Pi Foundation.

What makes them great for this purpose, in particular, is that they are incredibly cheap for what you get and, depending on the model, they come with a wide array of features, hardware and capabilities. You can whip up your MicroK8s cluster, ready to go, or you can build it into home automation. Or use it as a server. A display. A weather monitor. Or anything.

Why not?

Kubernetes, open-source technology, Raspberry Pi, clustering, beer: all are buzzwords that people like to talk about, and all have become, or continue to be, very popular. Knowing what those words mean is one thing, but understanding them and their implications is another. A cluster of Raspberry Pis running MicroK8s satisfies four out of five of those words. And if you’re old enough and so inclined, you could hit the fifth too. If you have the capacity and you have an interest, even if you don’t necessarily have a purpose, why not?

Kubernetes

Containers have become the de facto way to run applications in a production environment. Being able to manage containers to maximise efficiency, minimise downtime and scale your operations saves tremendous amounts of time and money. That is the job of Kubernetes, a project Google started and open-sourced in 2014, based on a decade and a half of running production workloads at scale. If you’re here because of the Raspberry Pi in the title and you don’t have a production environment to manage, skip ahead to the MicroK8s section.

What is Kubernetes?

Kubernetes is a portable, extensible, open-source platform for managing container workloads and services that facilitates both configuration and automation. If you run Kubernetes, you are running a Kubernetes cluster. As you’ll find in the tutorial, a cluster contains at least one worker node and one master node. The master is responsible for maintaining the desired state of the cluster, and the worker node runs the applications. This principle is the core of Kubernetes. Being able to break jobs down and run them in containers across any group of machines, whether physical, virtual or in the cloud, means the work and the containers aren’t tied to specific machines; they are “abstracted” across the cluster.
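To make the “desired state” idea a little more concrete, here is a toy sketch in Python. It is not Kubernetes code and does not use any Kubernetes API; the `reconcile` function, the app names and the node names are all made up for illustration. It only shows the general shape of the idea: compare what you asked for with what is actually running, and work out what needs to change.

```python
from collections import Counter


def reconcile(desired, running):
    """Work out what must change to move from `running` to `desired`.

    desired: dict mapping an app name to the number of copies wanted
    running: list of (app name, node name) tuples for live containers
    Both shapes are hypothetical, chosen only for this illustration.
    """
    actual = Counter(app for app, _node in running)
    actions = []
    for app in sorted(set(desired) | set(actual)):
        diff = desired.get(app, 0) - actual.get(app, 0)
        if diff > 0:
            actions.append(("start", app, diff))   # too few copies running
        elif diff < 0:
            actions.append(("stop", app, -diff))   # too many copies running
    return actions


# What we asked for versus what the (imaginary) Pis are running right now.
desired_state = {"web": 3, "worker": 1}
running_now = [("web", "pi-1"), ("web", "pi-2"), ("cache", "pi-3")]

plan = reconcile(desired_state, running_now)
print(plan)
```

Nothing in the plan names a specific machine. You say what you want, and deciding where it runs is left to the cluster.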

Problems it solves

A big question for any system is: how will it react to change? In fact, the whole of systems theory studies systems in context, looking at the components of a system in terms of the bigger picture and their relationships to each other, not in isolation. Kubernetes enables this sense of context. Dealing with containers and workloads in isolation can slow any infrastructure down and create more work for more people. In theory, this could work just fine on a small scale, but when you get to production or your systems get more complex, you’re asking for trouble.

Containerised applications isolate their share of a host OS’s resources from one another, which helps optimise utilisation and can improve performance. Kubernetes is the vessel that coordinates all of those containers. Because the containers on a machine share that machine’s single host OS, resource consumption is much lower than with VMs, where every VM instance needs an OS of its own.

Why you should care

Kubernetes hasn’t become a buzzword for no reason. It has a myriad of features and benefits that make it so. Ultimately it comes down to automated efficiency. What happens if a container running a workload goes down? If traffic to a container is too high? What happens if you have so much work to do that allocating resources by hand is a waste of time? And what happens if you are dealing with confidential data or you need to protect your workload? Well. Kubernetes solves all of those problems and more.

With Kubernetes, you can automate the creation, removal and management of resources for all your containers. You tell the platform how much CPU and RAM to allocate to each container. It handles unresponsive or failed containers automatically: it re-allocates their workloads and won’t advertise the containers again until they’re back up and running. And Kubernetes lets you store and manage sensitive information such as passwords, SSH keys and auth tokens, so you can deploy and update under a layer of security without having to rebuild container images.
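As a rough illustration of “telling the platform” these things, the sketch below builds a Kubernetes Deployment description as a plain Python dictionary and prints it as JSON. The field names (`resources`, `requests`, `limits`, `secretKeyRef` and so on) follow the standard Deployment format, but everything else is an assumption made up for this example: the `build_deployment` helper, the `photo-stitcher` name, the image address, the `UPLOAD_TOKEN` variable and the `upload-credentials` secret.

```python
import json


def build_deployment(name, image, replicas, cpu, memory, secret_name):
    """Return a Deployment manifest as a dict (hypothetical helper)."""
    labels = {"app": name}
    container = {
        "name": name,
        "image": image,
        # How much CPU and RAM each container asks for and may use at most.
        "resources": {
            "requests": {"cpu": cpu, "memory": memory},
            "limits": {"cpu": cpu, "memory": memory},
        },
        # Pull a token from a Secret at run time instead of baking it
        # into the container image. Secret name and key are assumptions.
        "env": [
            {
                "name": "UPLOAD_TOKEN",
                "valueFrom": {
                    "secretKeyRef": {"name": secret_name, "key": "token"}
                },
            }
        ],
    }
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,  # how many copies to keep running
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [container]},
            },
        },
    }


manifest = build_deployment(
    name="photo-stitcher",
    image="example.com/photo-stitcher:1.0",  # placeholder image address
    replicas=3,
    cpu="500m",       # half a CPU core
    memory="256Mi",
    secret_name="upload-credentials",
)
print(json.dumps(manifest, indent=2))
```

Assuming you have a working cluster and a secret with that name already exists, you could save the output to a file and hand it to `microk8s kubectl apply -f`. Treat the numbers as placeholders rather than recommendations for a Raspberry Pi.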

In fact, the latest Kubernetes release candidate is available for testing in the Snap Store. If you want to try the latest and greatest developments, finish reading this blog and head over.

MicroK8s

If Kubernetes (K8s) is as good as everyone says it is, then the next thing to try is to apply the same model elsewhere. Somewhere where resources are heavily constrained and the management of computational resources is a performance-limiting factor. How about, at the edge?

What is MicroK8s?

MicroK8s is the most minimal, fastest version of K8s out there that keeps the important features of a standard K8s cluster. It is optimised for the edge, with hundreds of thousands of lines of code taken out so that it is exactly what you need for managing devices. It makes single-(master)-node cluster deployments of Kubernetes easy for any purpose. There’s no need to deploy a full-blown, production-grade cluster when you’re prototyping or developing; you can test everything on MicroK8s before scaling.

Problems it solves

Typically, devices and device workloads are developed to work in silos. Devices at the edge are failure-prone, and managing each and every one in isolation is cumbersome. If a master node goes down, there’s no standard way to fix a device under the downed master device. With MicroK8s you can centrally manage every device in the cluster using a simple UI. It takes the complexity out of updates and roll-backs so that developers or organisations can manage their device estate with ease.

Why you should care

Edge resource management is already a big problem. The internet of things (IoT), or the internet of everything (IoE), is more heavily constrained by Moore’s law than anything else. Moore’s law loosely says that the number of transistors on a chip doubles roughly every two years, so computers keep getting faster and more capable while the price falls. Where most industries can deal with this by growing or adding more to a system, the edge has tight constraints on its size and number of resources.

Some of the biggest buzzwords that describe this effect are embedded industrial devices and smart homes: the idea of connecting robots or devices in a home or a factory and giving them the ability to communicate, learn and make decisions. That ability, without large amounts of computation or significant resource management, reaches a hard limit. With MicroK8s and its central node methodology, more resources become available and secure, and the value of containers as a scalable way of running workloads is transplanted to the edge.

Cluster Computing

The idea is simple. You connect a series of computers (nodes) over a network so that they can share resources (in a cluster) and execute workloads more quickly, more efficiently or in parallel. There are three types of cluster computer: load-balancing clusters, for resource distribution; high-performance clusters, sometimes called supercomputers, which pool resources for computationally expensive workloads; and high-availability clusters, which are predominantly for redundancy or failure recovery. You can use MicroK8s for any of the above.
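For a feel of what “resource distribution” means in the load-balancing case, here is a deliberately simple Python sketch that deals jobs out to nodes in turn, like cards. It is a toy, not how Kubernetes or MicroK8s actually decides where work goes, and the `assign_round_robin` function, photo names and node names are invented for the example.

```python
from itertools import cycle


def assign_round_robin(jobs, nodes):
    """Hand out jobs to nodes one at a time, looping back to the first node."""
    if not nodes:
        raise ValueError("need at least one node to assign work to")
    assignment = {node: [] for node in nodes}
    # cycle() repeats the node list forever; zip() stops when jobs run out.
    for job, node in zip(jobs, cycle(nodes)):
        assignment[node].append(job)
    return assignment


# Seven holiday photos shared across three imaginary Raspberry Pis.
photos = [f"photo-{i}.jpg" for i in range(1, 8)]
pis = ["pi-1", "pi-2", "pi-3"]

schedule = assign_round_robin(photos, pis)
for node, jobs in schedule.items():
    print(node, jobs)
```

A real scheduler would also look at how busy each node is and what each job needs, which is exactly the bookkeeping you would rather not do by hand.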

Why you should care

A cluster computer aims to make a group of computers appear as one system. In the beginning, cluster computing was almost exclusively a scaling solution for the enterprise. Since then, with the rise of the other technologies discussed here, the benefits have grown to include extreme availability, redundancy, resilience, load balancing, automated cross-platform management, parallel processing, resource sharing and capacity on demand. The trick is being able to manage said cluster efficiently. The trick is in K8s.

Further MicroK8s, cluster and Raspberry Pi reading

On the Raspberry Pi website, you will find the tutorial, Build an OctaPi. This is a comprehensive tutorial that uses nine (eight for the cluster, one as the client) Raspberry Pis as servers for much the same purposes as already described. It does not use any form of Kubernetes but you will be able to see the result is almost as cool.

To reiterate the first paragraph, if you want to build a Raspberry Pi cluster using MicroK8s, there is a tutorial on the Ubuntu discourse, along with plenty of others to get you started or to get you tinkering with other interesting technologies.

MicroK8s is built by the Kubernetes team at Canonical to bring production-grade Kubernetes to the developer community. It’s worth reading more about. Or if you don’t want to build a cluster but have an interest in MicroK8s, there are lots of great tutorials online.

Finally, Kubernetes is the biggest buzzword here. And unless you’re a wizard or work in the cloud business, you likely haven’t had much exposure to it. If this is the case, I can recommend two paths. You can go over to the K8s website, where all the docs are. This is probably a lot more information than is really digestible right now, but it’s a good source. Or you can head over to the Ubuntu tutorial on getting MicroK8s doing things on your own machine, which will walk you through a bit more of the information discussed here.

This blog was originally for Rhys’ personal blog as an information dump for himself.